Introduction

Robots are envisioned to bring improved efficiency and quality to many aspects of our daily lives, for example through autonomous driving, drone delivery, and service robots. Decision-making in robotic systems often faces multiple sources of uncertainty (e.g., incomplete sensory information, uncertainty in the environment, and interaction with other agents). As data- and learning-based methods continue to gain traction, we must understand how to leverage them in real-world robotic systems safely and robustly, both to avoid costly hardware failures and to allow deployment in the proximity of human operators. Addressing the safety challenges of real-world deployment will require cross-field collaboration. Our goal is to facilitate enduring interdisciplinary partnerships and to build a community that fosters the advancement of safe robot autonomy research.

Join our mailing list and the safe-robot-learning Google group to stay connected!

Review Paper and Simulation Benchmark

Review Paper on Safe Learning in Robotics

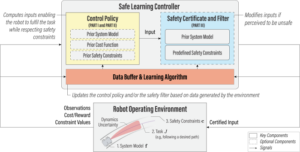

Abstract: As data- and learning-based methods gain traction, researchers must also understand how to leverage them in real-world robotic systems, where implementing and guaranteeing safety is imperative—to avoid costly hardware failures and allow deployment in the proximity of human operators. The last half-decade has seen a steep rise in the number of contributions to this area from both the control and reinforcement learning communities. Our goal is to provide a concise but holistic review of the recent advances made in safe learning control. We aim to demystify and unify the language and frameworks used in control theory and reinforcement learning research. To facilitate fair comparisons between these fields, we emphasize the need for realistic physics-based benchmarks. Our review includes learning-based control approaches that safely improve performance by learning the uncertain dynamics, reinforcement learning approaches that encourage safety or robustness, and methods that can formally certify the safety of a learned control policy.

Simulation Benchmark for Safe Robot Learning

Abstract: In recent years, reinforcement learning and learning-based control have gained significant traction, as has the study of their safety, which is crucial for deployment in real-world robots. However, to adequately gauge the progress and applicability of new results, we need tools to equitably compare the approaches proposed by the controls and reinforcement learning communities. Here, we propose a new open-source benchmark suite called safe-control-gym. Our starting point is OpenAI’s Gym API, which is one of the de facto standards in reinforcement learning research. Yet, we highlight the reasons for its limited appeal to control theory researchers, and to safe control researchers in particular, such as the lack of analytical models and constraint specifications. Thus, we propose to extend this API with (i) the ability to specify (and query) symbolic models and constraints and (ii) the ability to introduce simulated disturbances in the control inputs, measurements, and inertial properties. We provide implementations for three dynamic systems (the cart-pole and the 1D and 2D quadrotors) and two control tasks (stabilization and trajectory tracking). To demonstrate our proposal, and in an attempt to bring research communities closer together, we show how to use safe-control-gym to quantitatively compare the control performance, data efficiency, and safety of multiple approaches from the areas of traditional control, learning-based control, and reinforcement learning.
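To illustrate what extending a Gym-style interface with queryable models, constraints, and disturbances can look like, here is a minimal Python sketch of a 1D quadrotor environment. It is not safe-control-gym's actual interface. The class names `Quadrotor1D` and `BoxConstraint`, the method `symbolic_model`, and all parameter values (mass, time step, thrust and altitude bounds) are hypothetical. Rather than returning a true symbolic expression, the sketch returns the nominal model as discrete-time matrices. This is exact here because the 1D quadrotor dynamics are affine in state and thrust. The `step` return value follows the classic four-value Gym convention (observation, reward, done, info).

```python
import random
from dataclasses import dataclass

GRAVITY = 9.81  # m/s^2


@dataclass
class BoxConstraint:
    """Hypothetical box constraint lower <= value <= upper on one state or input entry."""
    name: str
    kind: str  # "state" or "input"
    index: int
    lower: float
    upper: float

    def violated(self, x, u):
        value = x[self.index] if self.kind == "state" else u[self.index]
        return not (self.lower <= value <= self.upper)


class Quadrotor1D:
    """Hypothetical Gym-style 1D quadrotor. State: [z, z_dot]; input: [thrust]."""

    def __init__(self, mass=0.027, dt=0.02, input_noise=0.0, obs_noise=0.0,
                 mass_perturbation=0.0, seed=None):
        self.nominal_mass = mass
        self.dt = dt
        self.input_noise = input_noise              # std. dev. of additive thrust noise
        self.obs_noise = obs_noise                  # std. dev. of additive measurement noise
        self.mass_perturbation = mass_perturbation  # max relative mass error
        self.rng = random.Random(seed)
        # Constraints belong to the environment, so a controller can query them.
        self.constraints = [
            BoxConstraint("altitude", "state", 0, 0.0, 2.0),
            BoxConstraint("thrust", "input", 0, 0.0, 0.6),
        ]
        self.reset()

    def symbolic_model(self):
        """Nominal model x+ = A x + B u + c (zero-order hold, exact for these dynamics)."""
        dt, m = self.dt, self.nominal_mass
        A = [[1.0, dt], [0.0, 1.0]]
        B = [[0.5 * dt**2 / m], [dt / m]]
        c = [-0.5 * GRAVITY * dt**2, -GRAVITY * dt]
        return A, B, c

    def reset(self):
        # Inertial disturbance: the simulated mass may deviate from the nominal one.
        scale = 1.0 + self.rng.uniform(-self.mass_perturbation, self.mass_perturbation)
        self.true_mass = self.nominal_mass * scale
        self.state = [1.0, 0.0]
        return self._observe()

    def _observe(self):
        # Measurement disturbance: Gaussian noise on every state component.
        return [s + self.rng.gauss(0.0, self.obs_noise) for s in self.state]

    def step(self, action):
        # Input disturbance: the applied thrust differs from the commanded one.
        thrust = action[0] + self.rng.gauss(0.0, self.input_noise)
        z, z_dot = self.state
        acc = thrust / self.true_mass - GRAVITY
        self.state = [z + self.dt * z_dot + 0.5 * self.dt**2 * acc, z_dot + self.dt * acc]
        # Constraints are checked on the true next state and the commanded input.
        violated = [c.name for c in self.constraints if c.violated(self.state, action)]
        reward = -(self.state[0] - 1.0) ** 2  # stabilization around z = 1 m
        done = bool(violated)
        return self._observe(), reward, done, {"constraint_violations": violated}


if __name__ == "__main__":
    env = Quadrotor1D(seed=0)
    hover = [env.nominal_mass * GRAVITY]  # thrust that cancels gravity
    A, B, c = env.symbolic_model()
    x = env.state
    pred = [sum(A[i][j] * x[j] for j in range(2)) + B[i][0] * hover[0] + c[i]
            for i in range(2)]
    obs, reward, done, info = env.step(hover)
    print("model prediction:", pred, "observation:", obs, "violations:", info)
```

With all disturbances switched off, the hover thrust keeps the vehicle at its initial altitude, and the nominal model predicts the next state exactly. Setting `mass_perturbation`, `input_noise`, or `obs_noise` above zero makes the queried model and the simulated plant diverge. This is the kind of mismatch a learning-based controller would be expected to handle while respecting the queried constraints.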

Upcoming Events

Workshop on Semantics for Robotics

Interdisciplinary workshop on semantics for robotics: from environment understanding and reasoning to safe interaction.

Speakers

Prof. Michael Milford

Prof. Luca Carlone

Prof. Angela Dai

Dr. Oier Mees

Dr. Masha Itkina

Prof. Marco Pavone

Prof. Andrea Bajcsy

Prof. Koushil Sreenath

Dr. Federico Tombari

Dr. Manuel Keppler

CDC Learning-Based Control Invited Sessions

Advances in learning for dynamical systems.

Invited Sessions

TBD

Organizers

Angela Schoellig

Sebastian Trimpe

Melanie Zeilinger

Matthias Müller

Related Resources

Talks and Discussions

Angela Schoellig, “safe-control-gym: A Unified Benchmark Suite for Safe Learning-Based Control and Reinforcement Learning,” ICRA 2022 Tutorial on Tools for Robotic Reinforcement Learning

Angela Schoellig, “Machine Learning for Robotics: Achieving Safety, Performance and Reliability by Combining Models and Data in a Closed-Loop System Architecture,” Intersections between Control, Learning and Optimization 2020

Angela Schoellig, “Safe Learning-based Control Using Gaussian Processes,” IFAC World Congress 2020

Past Events

Workshop on Benchmarking, Reproducibility, and Open-Source Code in Controls, CDC 2023

IROS Safe Robot Learning Competition, IROS 2022

ICRA Workshop on Releasing Robots into the Wild, ICRA 2022

Workshop on Deployable Decision Making in Embodied Systems, NeurIPS 2021

Workshop on Safe Real-World Robot Autonomy, IROS 2021

Workshop on Robotics for People (R4P): Perspectives on Interaction, Learning and Safety, RSS 2021

Invited Sessions on Learning-Based Control, CDC 2016-present

Workshop on Structured Approaches to Robot Learning for Improved Generalization, RSS 2020

Workshop on Algorithms & Architectures for Learning in-the-Loop Systems, ICRA 2019

Machine Learning in Planning and Control of Robot Motion Workshop II, IROS 2015

Machine Learning in Planning and Control of Robot Motion Workshop I, IROS 2014

Main Contributors

Collaborators

Somil Bansal, University of Southern California

Animesh Garg, University of Toronto

Matthias Müller, Leibniz University Hannover

Davide Scaramuzza, University of Zürich

Sebastian Trimpe, RWTH Aachen University

Melanie Zeilinger, ETH Zürich