Semantic Control for Robotics

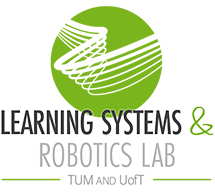

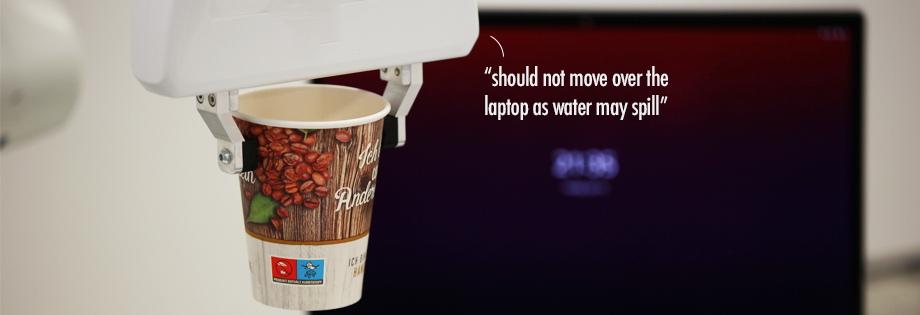

For robots to interact safely with people and the real world, they must not only perceive their surroundings but also understand them in a semantically meaningful way (i.e., understand the implications and pertinent properties of the objects in the scene). Advanced perception methods coupled with learning algorithms have made significant progress toward semantic understanding, and recent breakthroughs in foundation models have opened further opportunities for robots to reason about their operating environments in context. Reliably exploiting semantic information in embodied systems, however, requires perception, learning, and control algorithms to be designed in a tightly coupled way. In this project, we aim to close the perception-action loop and develop theoretical foundations and algorithms that allow robots to make semantically safe and intelligent decisions in everyday scenarios.

Related Publications

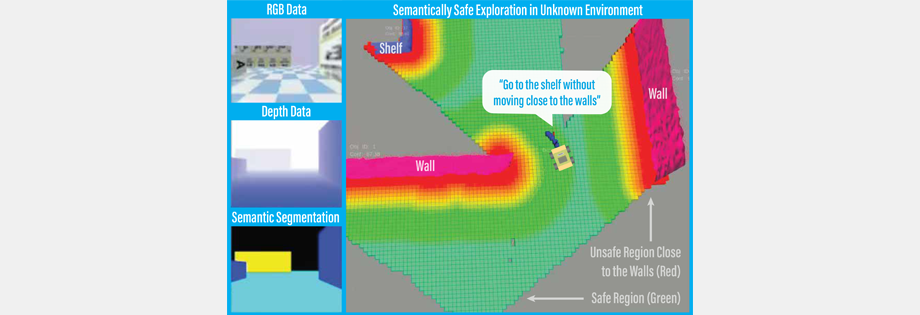

Autonomous robots navigating in changing environments demand adaptive navigation strategies for safe long-term operation. While many modern control paradigms offer theoretical guarantees, they often assume known extrinsic safety constraints, overlooking the challenges that arise in real-world environments where objects can appear, disappear, and shift over time. In this paper, we present a closed-loop perception-action pipeline that bridges this gap. Our system encodes an online-constructed dense map, along with object-level semantic and consistency estimates, into a control barrier function (CBF) that regulates safe regions in the scene. A model predictive controller (MPC) leverages the CBF-based safety constraints to adapt its navigation behavior, which is particularly crucial when scene changes may occur. We test the system in simulation and real-world experiments to demonstrate the impact of semantic information and scene-change handling on robot behavior, validating the practicality of our approach.

@inproceedings{qian-icra24,
  author = {Jingxing Qian and Siqi Zhou and Nicholas Jianrui Ren and Veronica Chatrath and Angela P. Schoellig},
  booktitle = {{Proc. of the IEEE International Conference on Robotics and Automation (ICRA)}},
  title = {Closing the Perception-Action Loop for Semantically Safe Navigation in Semi-Static Environments},
  year = {2024},
  note = {Accepted},
}
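The abstract above describes encoding semantic estimates into a control barrier function that an MPC enforces as a safety constraint. The snippet below is a minimal sketch of that idea under strong simplifying assumptions, not the paper's implementation: a 2D single-integrator robot, circular obstacles whose safety radius is inflated by a hypothetical per-class margin (`SEMANTIC_MARGIN`), the discrete-time CBF condition h(x_{k+1}) >= (1 - gamma) h(x_k) with gamma in (0, 1], and a brute-force sampling MPC standing in for a numerical optimizer. The dense map and the consistency estimates are omitted, and all names and numbers are illustrative.

```python
import math
from dataclasses import dataclass

# Hypothetical per-class safety margins in metres (illustrative values, not from the paper).
SEMANTIC_MARGIN = {"person": 0.8, "chair": 0.2}
DEFAULT_MARGIN = 0.3  # margin for classes not listed above


@dataclass
class Obstacle:
    x: float
    y: float
    radius: float  # geometric radius, e.g. taken from the map
    label: str  # semantic class, e.g. from an object detector


def barrier(px, py, obs):
    """CBF h(p) = ||p - c||^2 - r^2 with a semantically inflated radius r; h >= 0 is safe."""
    r = obs.radius + SEMANTIC_MARGIN.get(obs.label, DEFAULT_MARGIN)
    return (px - obs.x) ** 2 + (py - obs.y) ** 2 - r ** 2


def rollout(px, py, vx, vy, dt, horizon):
    """Predict single-integrator positions p_{k+1} = p_k + dt * v under a constant command."""
    return [(px + vx * dt * k, py + vy * dt * k) for k in range(horizon + 1)]


def satisfies_dcbf(traj, obstacles, gamma):
    """Check h(p_{k+1}) >= (1 - gamma) * h(p_k) for every predicted step and every obstacle."""
    for (x0, y0), (x1, y1) in zip(traj, traj[1:]):
        for obs in obstacles:
            if barrier(x1, y1, obs) < (1.0 - gamma) * barrier(x0, y0, obs):
                return False
    return True


def candidate_commands(v_max, n_headings=16):
    """Sampled velocity commands: standing still plus a grid of headings and speeds."""
    yield 0.0, 0.0
    for i in range(n_headings):
        heading = 2.0 * math.pi * i / n_headings
        for speed in (0.25 * v_max, 0.5 * v_max, v_max):
            yield speed * math.cos(heading), speed * math.sin(heading)


def mpc_step(px, py, goal, obstacles, dt=0.1, horizon=10, gamma=0.3, v_max=1.0):
    """Return the lowest-cost command whose whole predicted rollout satisfies the CBF condition."""
    best, best_cost = (0.0, 0.0), math.inf
    for vx, vy in candidate_commands(v_max):
        traj = rollout(px, py, vx, vy, dt, horizon)
        if not satisfies_dcbf(traj, obstacles, gamma):
            continue  # reject candidates that violate the CBF condition anywhere on the horizon
        ex, ey = traj[-1][0] - goal[0], traj[-1][1] - goal[1]
        cost = ex * ex + ey * ey + 0.01 * (vx * vx + vy * vy)  # terminal error + control effort
        if cost < best_cost:
            best, best_cost = (vx, vy), cost
    return best


def simulate(start, goal, obstacles, steps=200, dt=0.1):
    """Receding-horizon loop: re-plan at every step and apply only the first command."""
    px, py = start
    path = [(px, py)]
    for _ in range(steps):
        vx, vy = mpc_step(px, py, goal, obstacles, dt=dt)
        px, py = px + vx * dt, py + vy * dt
        path.append((px, py))
    return path


if __name__ == "__main__":
    obstacles = [Obstacle(2.0, 0.1, 0.3, "person"), Obstacle(3.5, -0.5, 0.3, "chair")]
    path = simulate((0.0, 0.0), (5.0, 0.0), obstacles)
    lowest = min(barrier(x, y, o) for x, y in path for o in obstacles)
    print(f"final position ({path[-1][0]:.2f}, {path[-1][1]:.2f}), lowest barrier value {lowest:.3f}")
```

Because every applied command satisfies the CBF condition on its first step, and standing still is always feasible from a safe state, the barrier value stays non-negative along the closed-loop path. Raising a class's entry in `SEMANTIC_MARGIN` makes the robot keep a wider berth around objects of that class, and a smaller gamma makes the approach to any obstacle more conservative. With a short horizon and a purely terminal cost, a local controller like this one can still stall in front of a large obstacle.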