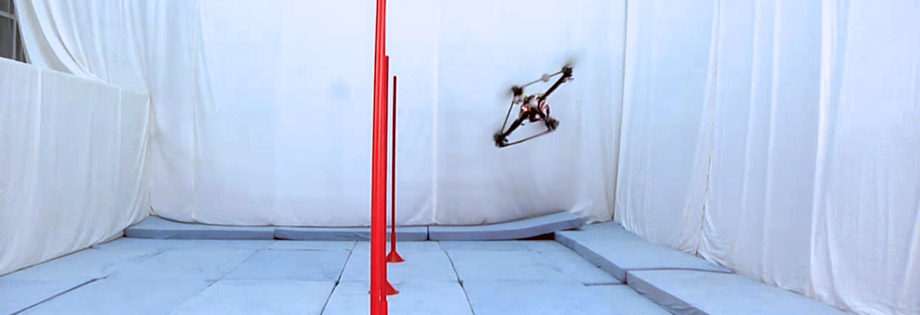

Aerial and Ground Robot Racing

This project explores the physical limits of ground and aerial robots. When operating robots in these regimes, unknown dynamic effects (for example, unmodelled robot dynamics or unmodelled interactions with the environment) can significantly corrupt the robot’s performance. To compensate for such effects, we apply learning-based control approaches that adapt both the reference trajectory and the feedback controller over time. The result is significantly better tracking accuracy at increased speeds.

Drone Racing Competition @ IROS 2022

See competition details here. The competition description paper can be found here. The GitHub repo can be found here.

Related Publications

Speed daemon: experience-based mobile robot speed scheduling. C. J. Ostafew, A. P. Schoellig, T. D. Barfoot, and J. Collier, in Proc. of the International Conference on Computer and Robot Vision (CRV), 2014, pp. 56-62.

A time-optimal speed schedule results in a mobile robot driving along a planned path at or near the limits of the robot’s capability. However, deriving models to predict the effect of increased speed can be very difficult. In this paper, we present a speed scheduler that uses previous experience, instead of complex models, to generate time-optimal speed schedules. The algorithm is designed for a vision-based, path-repeating mobile robot and uses experience to ensure reliable localization, low path-tracking errors, and realizable control inputs while maximizing the speed along the path. To our knowledge, this is the first speed scheduler to incorporate experience from previous path traversals in order to address system constraints. The proposed speed scheduler was tested in over 4 km of path traversals in outdoor terrain using a large Ackermann-steered robot travelling between 0.5 m/s and 2.0 m/s. The approach to speed scheduling is shown to generate fast speed schedules while remaining within the limits of the robot’s capability.
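
As a rough illustration of scheduling speed from experience rather than from a model, the sketch below raises the speed on path segments where the previous traversal stayed within an error limit and lowers it where it did not. The update rule, the function name `update_speed_schedule`, the step size, and the error threshold are assumptions made for illustration and are not taken from the paper; only the 0.5 m/s to 2.0 m/s speed range comes from the abstract.

```python
def update_speed_schedule(speeds, tracking_errors, max_error=0.2,
                          step=0.25, v_min=0.5, v_max=2.0):
    """Return a new per-segment speed schedule from the previous traversal.

    speeds          -- speeds (m/s) used on each path segment last time
    tracking_errors -- path-tracking error (m) observed on each segment
    max_error       -- hypothetical error limit (m); not from the paper
    step            -- hypothetical speed increment (m/s); not from the paper
    """
    if len(speeds) != len(tracking_errors):
        raise ValueError("one error value is required per segment")
    new_speeds = []
    for v, e in zip(speeds, tracking_errors):
        # Speed up where experience was good, slow down where it was not.
        v_new = v + step if e <= max_error else v - step
        # Keep the schedule inside the robot's speed range.
        new_speeds.append(min(v_max, max(v_min, v_new)))
    return new_speeds


schedule = update_speed_schedule([1.0, 1.0, 2.0, 0.5], [0.05, 0.4, 0.1, 0.3])
print(schedule)  # [1.25, 0.75, 2.0, 0.5]
```

A real scheduler would also need to account for localization reliability and control-input limits, which the abstract lists alongside path-tracking error; they are left out here to keep the sketch short.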

@INPROCEEDINGS{ostafew-crv14,

author = {Chris J. Ostafew and Angela P. Schoellig and Timothy D. Barfoot and J. Collier},

title = {Speed daemon: experience-based mobile robot speed scheduling},

booktitle = {{Proc. of the International Conference on Computer and Robot Vision (CRV)}},

pages = {56-62},

year = {2014},

doi = {10.1109/CRV.2014.16},

urlvideo = {https://youtu.be/Pu3_F6k6Fa4?list=PLC12E387419CEAFF2},

abstract = {A time-optimal speed schedule results in a mobile robot driving along a planned path at or near the limits of the robot's capability. However, deriving models to predict the effect of increased speed can be very difficult. In this paper, we present a speed scheduler that uses previous experience, instead of complex models, to generate time-optimal speed schedules. The algorithm is designed for a vision-based, path-repeating mobile robot and uses experience to ensure reliable localization, low path-tracking errors, and realizable control inputs while maximizing the speed along the path. To our knowledge, this is the first speed scheduler to incorporate experience from previous path traversals in order to address system constraints. The proposed speed scheduler was tested in over 4 km of path traversals in outdoor terrain using a large Ackermann-steered robot travelling between 0.5 m/s and 2.0 m/s. The approach to speed scheduling is shown to generate fast speed schedules while remaining within the limits of the robot's capability.},

note = {Best Robotics Paper Award}

}

Learning-based nonlinear model predictive control to improve vision-based mobile robot path-tracking in challenging outdoor environments. C. J. Ostafew, A. P. Schoellig, and T. D. Barfoot, in Proc. of the IEEE International Conference on Robotics and Automation (ICRA), 2014, pp. 4029-4036.

This paper presents a Learning-based Nonlinear Model Predictive Control (LB-NMPC) algorithm for an autonomous mobile robot to reduce path-tracking errors over repeated traverses along a reference path. The LB-NMPC algorithm uses a simple a priori vehicle model and a learned disturbance model. Disturbances are modelled as a Gaussian Process (GP) based on experience collected during previous traversals as a function of system state, input and other relevant variables. Modelling the disturbance as a GP enables interpolation and extrapolation of learned disturbances, a key feature of this algorithm. Localization for the controller is provided by an on-board, vision-based mapping and navigation system enabling operation in large-scale, GPS-denied environments. The paper presents experimental results including over 1.8 km of travel by a four-wheeled, 50 kg robot travelling through challenging terrain (including steep, uneven hills) and by a six-wheeled, 160 kg robot learning disturbances caused by unmodelled dynamics at speeds ranging from 0.35 m/s to 1.0 m/s. The speed is scheduled to balance trial time, path-tracking errors, and localization reliability based on previous experience. The results show that the system can start from a generic a priori vehicle model and subsequently learn to reduce vehicle- and trajectory-specific path-tracking errors based on experience.
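
To make the idea of a learned disturbance model more concrete, here is a minimal Gaussian Process regression sketch in NumPy. It assumes a zero-mean GP with a squared-exponential kernel and a one-dimensional input (the commanded speed); the kernel choice, the hyperparameters, and the training data are hypothetical and are not the paper's model, which uses the system state, input, and other variables as GP inputs.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, signal_var=1.0):
    """Squared-exponential kernel between inputs a (n, d) and b (m, d)."""
    sq_dist = (np.sum(a**2, axis=1)[:, None]
               + np.sum(b**2, axis=1)[None, :]
               - 2.0 * a @ b.T)
    return signal_var * np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gp_predict(x_train, y_train, x_query, noise_var=1e-4):
    """Posterior mean and variance of a zero-mean GP at x_query."""
    k_tt = rbf_kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    k_qt = rbf_kernel(x_query, x_train)
    mean = k_qt @ np.linalg.solve(k_tt, y_train)
    reduction = np.sum(k_qt * np.linalg.solve(k_tt, k_qt.T).T, axis=1)
    var = np.diag(rbf_kernel(x_query, x_query)) - reduction
    return mean, var


# Hypothetical experience: disturbances observed at speeds within 0.35-1.0 m/s.
speeds = np.array([[0.35], [0.5], [0.65], [0.8], [1.0]])
disturbances = 0.1 * speeds[:, 0] ** 2  # made-up data, for illustration only

# Query one speed seen before and one far outside the experienced range.
mean, var = gp_predict(speeds, disturbances, np.array([[0.65], [5.0]]))
print(mean, var)
```

The predictive variance is small near previously visited operating points and grows back toward the prior variance far from them, which gives a controller a way to tell how much to trust an extrapolated disturbance estimate.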

@INPROCEEDINGS{ostafew-icra14,

author = {Chris J. Ostafew and Angela P. Schoellig and Timothy D. Barfoot},

title = {Learning-based nonlinear model predictive control to improve vision-based mobile robot path-tracking in challenging outdoor environments},

booktitle = {{Proc. of the IEEE International Conference on Robotics and Automation (ICRA)}},

pages = {4029-4036},

year = {2014},

doi = {10.1109/ICRA.2014.6907444},

urlvideo = {https://youtu.be/MwVElAn95-M?list=PLC12E387419CEAFF2},

abstract = {This paper presents a Learning-based Nonlinear Model Predictive Control (LB-NMPC) algorithm for an autonomous mobile robot to reduce path-tracking errors over repeated traverses along a reference path. The LB-NMPC algorithm uses a simple a priori vehicle model and a learned disturbance model. Disturbances are modelled as a Gaussian Process (GP) based on experience collected during previous traversals as a function of system state, input and other relevant variables. Modelling the disturbance as a GP enables interpolation and extrapolation of learned disturbances, a key feature of this algorithm. Localization for the controller is provided by an on-board, vision-based mapping and navigation system enabling operation in large-scale, GPS-denied environments. The paper presents experimental results including over 1.8 km of travel by a four-wheeled, 50 kg robot travelling through challenging terrain (including steep, uneven hills) and by a six-wheeled, 160 kg robot learning disturbances caused by unmodelled dynamics at speeds ranging from 0.35 m/s to 1.0 m/s. The speed is scheduled to balance trial time, path-tracking errors, and localization reliability based on previous experience. The results show that the system can start from a generic a priori vehicle model and subsequently learn to reduce vehicle- and trajectory-specific path-tracking errors based on experience.}

}

Visual teach and repeat, repeat, repeat: iterative learning control to improve mobile robot path tracking in challenging outdoor environments. C. J. Ostafew, A. P. Schoellig, and T. D. Barfoot, in Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2013, pp. 176-181.

This paper presents a path-repeating, mobile robot controller that combines a feedforward, proportional Iterative Learning Control (ILC) algorithm with a feedback-linearized path-tracking controller to reduce path-tracking errors over repeated traverses along a reference path. Localization for the controller is provided by an on-board, vision-based mapping and navigation system enabling operation in large-scale, GPS-denied, extreme environments. The paper presents experimental results including over 600 m of travel by a four-wheeled, 50 kg robot travelling through challenging terrain including steep hills and sandy turns and by a six-wheeled, 160 kg robot at gradually-increased speeds up to three times faster than the nominal, safe speed. In the absence of a global localization system, ILC is demonstrated to reduce path-tracking errors caused by unmodelled robot dynamics and terrain challenges.
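
The proportional ILC update can be illustrated on a toy problem. The sketch below assumes a scalar first-order plant with a disturbance that repeats on every trial; the plant, its parameters, the learning gain, and the function names are hypothetical stand-ins, and the feedback-linearized path-tracking controller used in the paper is omitted.

```python
import math


def run_trial(u, disturbance, a=0.3, b=0.5):
    """Simulate y[k+1] = a*y[k] + b*u[k] + d[k] from y[0] = 0; return y[1:]."""
    y, outputs = 0.0, []
    for u_k, d_k in zip(u, disturbance):
        y = a * y + b * u_k + d_k
        outputs.append(y)
    return outputs


def proportional_ilc(reference, disturbance, gain=1.0, trials=30):
    """Repeat the same path, correcting the feedforward input after each trial."""
    u = [0.0] * len(reference)
    error_norms = []
    for _ in range(trials):
        outputs = run_trial(u, disturbance)
        errors = [r - y for r, y in zip(reference, outputs)]
        error_norms.append(math.sqrt(sum(e * e for e in errors)))
        # Proportional update: shift each input sample by gain times the
        # error it produced one step later on the previous trial.
        u = [u_k + gain * e_k for u_k, e_k in zip(u, errors)]
    return u, error_norms


n = 50
reference = [math.sin(math.pi * k / (n - 1)) for k in range(n)]
disturbance = [0.2] * n  # hypothetical repeating disturbance, e.g. a slope
_, error_norms = proportional_ilc(reference, disturbance)
print(error_norms[0], error_norms[-1])
```

For this toy plant the tracking error shrinks from trial to trial because `abs(1 - gain * b)` is below one; a gain that violates this condition would make the iteration diverge.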

@INPROCEEDINGS{ostafew-iros13,

author = {Chris J. Ostafew and Angela P. Schoellig and Timothy D. Barfoot},

title = {Visual teach and repeat, repeat, repeat: Iterative learning control to improve mobile robot path tracking in challenging outdoor environments},

booktitle = {{Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}},

pages = {176-181},

year = {2013},

doi = {10.1109/IROS.2013.6696350},

urlvideo = {https://youtu.be/08_d1HSPADA?list=PLC12E387419CEAFF2},

abstract = {This paper presents a path-repeating, mobile robot controller that combines a feedforward, proportional Iterative Learning Control (ILC) algorithm with a feedback-linearized path-tracking controller to reduce path-tracking errors over repeated traverses along a reference path. Localization for the controller is provided by an on-board, vision-based mapping and navigation system enabling operation in large-scale, GPS-denied, extreme environments. The paper presents experimental results including over 600 m of travel by a four-wheeled, 50 kg robot travelling through challenging terrain including steep hills and sandy turns and by a six-wheeled, 160 kg robot at gradually-increased speeds up to three times faster than the nominal, safe speed. In the absence of a global localization system, ILC is demonstrated to reduce path-tracking errors caused by unmodelled robot dynamics and terrain challenges.}

}

Optimization-based iterative learning for precise quadrocopter trajectory tracking. A. P. Schoellig, F. L. Mueller, and R. D’Andrea, Autonomous Robots, vol. 33, iss. 1-2, pp. 103-127, 2012.

Current control systems regulate the behavior of dynamic systems by reacting to noise and unexpected disturbances as they occur. To improve the performance of such control systems, experience from iterative executions can be used to anticipate recurring disturbances and proactively compensate for them. This paper presents an algorithm that exploits data from previous repetitions in order to learn to precisely follow a predefined trajectory. We adapt the feed-forward input signal to the system with the goal of achieving high tracking performance – even under the presence of model errors and other recurring disturbances. The approach is based on a dynamics model that captures the essential features of the system and that explicitly takes system input and state constraints into account. We combine traditional optimal filtering methods with state-of-the-art optimization techniques in order to obtain an effective and computationally efficient learning strategy that updates the feed-forward input signal according to a customizable learning objective. It is possible to define a termination condition that stops an execution early if the deviation from the nominal trajectory exceeds a given bound. This allows for a safe learning that gradually extends the time horizon of the trajectory. We developed a framework for generating arbitrary flight trajectories and for applying the algorithm to highly maneuverable autonomous quadrotor vehicles in the ETH Flying Machine Arena testbed. Experimental results are discussed for selected trajectories and different learning algorithm parameters.
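
The two-step structure described here, a filter that estimates the recurring disturbance followed by an optimization that updates the feed-forward input, can be sketched as follows. This is a simplified illustration under stated assumptions: the lifted model `F` comes from a made-up scalar plant, the disturbance is estimated with a basic Kalman filter, and the input update is an unconstrained least-squares problem, whereas the paper explicitly handles input and state constraints. All names and parameter values are hypothetical.

```python
import numpy as np


def lifted_model(a, b, n):
    """Lower-triangular F mapping an input sequence to the output sequence
    of y[k+1] = a*y[k] + b*u[k] (an assumed toy model)."""
    F = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1):
            F[i, j] = b * a ** (i - j)
    return F


def learn(F, true_disturbance, trials=10, process_var=1e-4, meas_var=1e-2,
          noise_std=0.0, seed=0):
    """Alternate between estimating the repeating disturbance and re-optimising
    the feed-forward input. Returns the input, the estimate, and error norms."""
    rng = np.random.default_rng(seed)
    n = F.shape[0]
    identity = np.eye(n)
    u, d_hat, P = np.zeros(n), np.zeros(n), np.eye(n)
    error_norms = []
    for _ in range(trials):
        # Execute one trial; y is the deviation from the desired trajectory.
        y = F @ u + true_disturbance + rng.normal(0.0, noise_std, n)
        error_norms.append(float(np.linalg.norm(y)))
        # Kalman filter step for a slowly varying disturbance d.
        P = P + process_var * identity
        K = P @ np.linalg.inv(P + meas_var * identity)
        d_hat = d_hat + K @ (y - F @ u - d_hat)
        P = (identity - K) @ P
        # Input update: minimise ||F u + d_hat||_2 (no constraints here).
        u = np.linalg.lstsq(F, -d_hat, rcond=None)[0]
    return u, d_hat, error_norms


F = lifted_model(a=0.3, b=0.5, n=20)
true_disturbance = 0.2 * np.ones(20)  # hypothetical model error
u, d_hat, error_norms = learn(F, true_disturbance)
print(error_norms[0], error_norms[-1])
```

Setting `noise_std` above zero adds non-repeating measurement noise, in which case the filter averages over trials instead of trusting any single execution.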

@ARTICLE{schoellig-auro12,

author = {Angela P. Schoellig and Fabian L. Mueller and Raffaello D'Andrea},

title = {Optimization-based iterative learning for precise quadrocopter trajectory tracking},

journal = {{Autonomous Robots}},

volume = {33},

number = {1-2},

pages = {103-127},

year = {2012},

doi = {10.1007/s10514-012-9283-2},

urlvideo = {http://youtu.be/goVuP5TJIUU?list=PLC12E387419CEAFF2},

abstract = {Current control systems regulate the behavior of dynamic systems by reacting to noise and unexpected disturbances as they occur. To improve the performance of such control systems, experience from iterative executions can be used to anticipate recurring disturbances and proactively compensate for them. This paper presents an algorithm that exploits data from previous repetitions in order to learn to precisely follow a predefined trajectory. We adapt the feed-forward input signal to the system with the goal of achieving high tracking performance - even under the presence of model errors and other recurring disturbances. The approach is based on a dynamics model that captures the essential features of the system and that explicitly takes system input and state constraints into account. We combine traditional optimal filtering methods with state-of-the-art optimization techniques in order to obtain an effective and computationally efficient learning strategy that updates the feed-forward input signal according to a customizable learning objective. It is possible to define a termination condition that stops an execution early if the deviation from the nominal trajectory exceeds a given bound. This allows for a safe learning that gradually extends the time horizon of the trajectory. We developed a framework for generating arbitrary flight trajectories and for applying the algorithm to highly maneuverable autonomous quadrotor vehicles in the ETH Flying Machine Arena testbed. Experimental results are discussed for selected trajectories and different learning algorithm parameters.}

}

Iterative learning of feed-forward corrections for high-performance tracking. F. L. Mueller, A. P. Schoellig, and R. D’Andrea, in Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2012, pp. 3276-3281.

We revisit a recently developed iterative learning algorithm that enables systems to learn from a repeated operation with the goal of achieving high tracking performance of a given trajectory. The learning scheme is based on a coarse dynamics model of the system and uses past measurements to iteratively adapt the feed-forward input signal to the system. The novelty of this work is an identification routine that uses a numerical simulation of the system dynamics to extract the required model information. This allows the learning algorithm to be applied to any dynamic system for which a dynamics simulation is available (including systems with underlying feedback loops). The proposed learning algorithm is applied to a quadrocopter system that is guided by a trajectory-following controller. With the identification routine, we are able to extend our previous learning results to three-dimensional quadrocopter motions and achieve significantly higher tracking accuracy due to the underlying feedback control, which accounts for non-repetitive noise.
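
One plausible way to extract model information from a simulation is to perturb the feed-forward input one sample at a time and record how the simulated output changes. The sketch below does this for a hypothetical closed-loop simulator (a scalar plant with a proportional feedback loop); the simulator, its parameters, and the finite-difference scheme are assumptions for illustration and may differ from the identification routine in the paper.

```python
def simulate(u_ff, a=0.9, b=0.5, kp=0.4):
    """Hypothetical closed-loop simulation: plant x[k+1] = a*x[k] + b*u[k]
    with an underlying feedback law u[k] = u_ff[k] - kp*x[k]."""
    x, outputs = 0.0, []
    for u_k in u_ff:
        x = a * x + b * (u_k - kp * x)
        outputs.append(x)
    return outputs


def identify_lifted_model(simulator, nominal_input, eps=1e-3):
    """Estimate F[i][j] = d(output i) / d(input j) by perturbing one input
    sample at a time in simulation."""
    n = len(nominal_input)
    nominal_output = simulator(nominal_input)
    F = [[0.0] * n for _ in range(n)]
    for j in range(n):
        perturbed = list(nominal_input)
        perturbed[j] += eps
        response = simulator(perturbed)
        for i in range(n):
            F[i][j] = (response[i] - nominal_output[i]) / eps
    return F


F = identify_lifted_model(simulate, [0.0] * 5)
print([round(value, 6) for value in F[1]])
```

Because the simulator is treated as a black box, the same routine works whether or not a feedback loop sits inside it; the identified matrix then describes the closed-loop response that the learning update acts on.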

@INPROCEEDINGS{mueller-iros12,

author = {Fabian L. Mueller and Angela P. Schoellig and Raffaello D'Andrea},

title = {Iterative learning of feed-forward corrections for high-performance tracking},

booktitle = {{Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}},

pages = {3276-3281},

year = {2012},

doi = {10.1109/IROS.2012.6385647},

urlvideo = {https://youtu.be/zHTCsSkmADo?list=PLC12E387419CEAFF2},

urlslides = {../../wp-content/papercite-data/slides/mueller-iros12-slides.pdf},

abstract = {We revisit a recently developed iterative learning algorithm that enables systems to learn from a repeated operation with the goal of achieving high tracking performance of a given trajectory. The learning scheme is based on a coarse dynamics model of the system and uses past measurements to iteratively adapt the feed-forward input signal to the system. The novelty of this work is an identification routine that uses a numerical simulation of the system dynamics to extract the required model information. This allows the learning algorithm to be applied to any dynamic system for which a dynamics simulation is available (including systems with underlying feedback loops). The proposed learning algorithm is applied to a quadrocopter system that is guided by a trajectory-following controller. With the identification routine, we are able to extend our previous learning results to three-dimensional quadrocopter motions and achieve significantly higher tracking accuracy due to the underlying feedback control, which accounts for non-repetitive noise.}

}