Mapping and Localization in Changing Environments

A key factor underpinning the reliable operation of any autonomous system is its ability to map an environment and localize itself against that map. Our lab has been pursuing a multimodal approach to localization and mapping that leverages diverse sensing modalities. Our work can be categorized into two streams:

A. Mapping and Localization in Semi-Static Environments: Mapping and localization have traditionally been performed under the assumption of a static environment; however, many realistic environments include slowly varying objects that may appear or disappear at different times (e.g., warehouses or factories undergoing large scenic changes over a day or even a few hours). Taking these changes into account is critical for accurate long-term operation. We have been working on augmenting visual simultaneous localization and mapping (vSLAM) systems with models that capture the dynamic nature of certain objects in the environment.

B. Range-Based Localization: Another line of research leverages range measurements for localization in dynamic environments. We have explored range-based localization using ultra-wideband (UWB) radio technology, which is inexpensive and can enable fast deployment of robots in new environments. The scope of our project includes modeling different aspects of range-based localization, such as placement of range sensors, calibration, sensor fusion with different sensing modalities, and modeling of spatial biases, to achieve state-of-the-art localization performance.
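
As a rough illustration of what range-based localization computes, the sketch below recovers a 2D position from distances to anchors at known locations using a textbook linearized least-squares fix. It is not the method used in any of the publications listed here: the function name `trilaterate_2d`, the anchor layout, and the noise-free ranges are all assumptions made for the example, and the sketch ignores the measurement biases and sensor fusion discussed above.

```python
import math


def trilaterate_2d(anchors, ranges):
    """Estimate a 2D position from ranges to three or more known anchors.

    Hypothetical helper for illustration only: it ignores measurement
    bias and does not weight measurements by their noise level.
    """
    if len(anchors) < 3 or len(anchors) != len(ranges):
        raise ValueError("need matching anchors/ranges with at least 3 anchors")
    (x0, y0), r0 = anchors[0], ranges[0]
    rows, rhs = [], []
    # Subtracting the first range equation from each of the others cancels
    # the quadratic terms and leaves one linear equation per extra anchor:
    #   2(xi - x0) x + 2(yi - y0) y = r0^2 - ri^2 + xi^2 + yi^2 - x0^2 - y0^2
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        rows.append((2.0 * (xi - x0), 2.0 * (yi - y0)))
        rhs.append(r0**2 - ri**2 + xi**2 + yi**2 - x0**2 - y0**2)
    # Solve the 2x2 normal equations (A^T A) p = A^T b in closed form.
    a11 = sum(a * a for a, _ in rows)
    a12 = sum(a * b for a, b in rows)
    a22 = sum(b * b for _, b in rows)
    b1 = sum(a * c for (a, _), c in zip(rows, rhs))
    b2 = sum(b * c for (_, b), c in zip(rows, rhs))
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("anchor geometry is degenerate (e.g. collinear anchors)")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)


# Assumed example layout: four anchors at the corners of a 4 m x 3 m room.
anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (4.0, 3.0)]
true_position = (1.0, 2.0)
ranges = [math.dist(true_position, anchor) for anchor in anchors]
estimate = trilaterate_2d(anchors, ranges)
print("estimated position:", estimate)
```

With noisy or biased ranges this linear fix is typically only a starting point; an iterative or probabilistic estimator would refine it.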

Related Publications

Ultra-wideband time difference of arrival indoor localization: from sensor placement to system evaluation. W. Zhao, A. Goudar, M. Tang, and A. P. Schoellig. IEEE Robotics and Automation Magazine, 2024. Under review.

Wireless indoor localization has attracted significant research interest due to its high accuracy, low cost, lightweight design, and low power consumption. Specifically, ultra-wideband (UWB) time difference of arrival (TDOA)-based localization has emerged as a scalable positioning solution for mobile robots, consumer electronics, and wearable devices, featuring good accuracy and reliability. While UWB TDOA-based localization systems rely on the deployment of UWB radio sensors as positioning landmarks, existing works often assume these placements are predetermined or study the sensor placement problem alone without evaluating it in practical scenarios. In this article, we bridge this gap by approaching the UWB TDOA localization from a system-level perspective, integrating sensor placement as a key component and conducting practical evaluation in real-world scenarios. Through extensive real-world experiments, we demonstrate the accuracy and robustness of our localization system, comparing its performance to the theoretical lower bounds. Using a challenging multi-room environment as a case study, we illustrate the full system construction process, from sensor placement optimization to real-world deployment. Our evaluation, comprising a cumulative total of 39 minutes of real-world experiments involving up to five agents and covering 2608 meters across four distinct scenarios, provides valuable insights and guidelines for constructing UWB TDOA localization systems.

@ARTICLE{zhao-ram24,

title = {Ultra-wideband Time Difference of Arrival Indoor Localization: From

Sensor Placement to System Evaluation},

author = {Wenda Zhao and Abhishek Goudar and Mingliang Tang and Angela P. Schoellig},

journal = {{IEEE Robotics and Automation Magazine}},

note = {Under review},

year = {2024},

urllink = {https://arxiv.org/abs/2412.12427},

abstract = {Wireless indoor localization has attracted significant research

interest due to its high accuracy, low cost, lightweight design,

and low power consumption. Specifically, ultra-wideband (UWB)

time difference of arrival (TDOA)-based localization has emerged

as a scalable positioning solution for mobile robots, consumer

electronics, and wearable devices, featuring good accuracy and

reliability. While UWB TDOA-based localization systems rely on

the deployment of UWB radio sensors as positioning landmarks,

existing works often assume these placements are predetermined or

study the sensor placement problem alone without evaluating it in

practical scenarios. In this article, we bridge this gap by

approaching the UWB TDOA localization from a system-level

perspective, integrating sensor placement as a key component and

conducting practical evaluation in real-world scenarios. Through

extensive real-world experiments, we demonstrate the accuracy and

robustness of our localization system, comparing its performance

to the theoretical lower bounds. Using a challenging multi-room

environment as a case study, we illustrate the full system

construction process, from sensor placement optimization to

real-world deployment. Our evaluation, comprising a cumulative

total of 39 minutes of real-world experiments involving up to

five agents and covering 2608 meters across four distinct

scenarios, provides valuable insights and guidelines for

constructing UWB TDOA localization systems.}

}

Optimal initialization strategies for range-only trajectory estimation. A. Goudar, F. Dümbgen, T. D. Barfoot, and A. P. Schoellig. IEEE Robotics and Automation Letters, vol. 9, iss. 3, p. 2160–2167, 2024.

Range-only (RO) pose estimation involves determining a robot’s pose over time by measuring the distance between multiple devices on the robot, known as tags, and devices installed in the environment, known as anchors. The non-convex nature of the range measurement model results in a cost function with possible local minima. In the absence of a good initial guess, commonly used iterative solvers can get stuck in these local minima resulting in poor trajectory estimation accuracy. In this letter, we propose convex relaxations to the original non-convex problem based on semidefinite programs (SDPs). Specifically, we formulate computationally tractable SDP relaxations to obtain accurate initial pose and trajectory estimates for RO trajectory estimation under static and dynamic (i.e., constant-velocity motion) conditions. Through simulation and hardware experiments, we demonstrate that our proposed approaches estimate the initial pose and initial trajectories accurately compared to iterative local solvers. Additionally, the proposed relaxations recover global minima under moderate range measurement noise levels.

@ARTICLE{goudar-ral24b,

author={Abhishek Goudar and Frederike D{\"u}mbgen and Timothy D. Barfoot and Angela P. Schoellig},

journal={{IEEE Robotics and Automation Letters}},

title={Optimal Initialization Strategies for Range-Only Trajectory Estimation},

year={2024},

volume={9},

number={3},

pages={2160--2167},

doi={10.1109/LRA.2024.3354623},

abstract={Range-only (RO) pose estimation involves determining a robot's pose over time by measuring the distance between multiple devices on the robot, known as tags, and devices installed in the environment, known as anchors. The non-convex nature of the range measurement model results in a cost function with possible local minima. In the absence of a good initial guess, commonly used iterative solvers can get stuck in these local minima resulting in poor trajectory estimation accuracy. In this letter, we propose convex relaxations to the original non-convex problem based on semidefinite programs (SDPs). Specifically, we formulate computationally tractable SDP relaxations to obtain accurate initial pose and trajectory estimates for RO trajectory estimation under static and dynamic (i.e., constant-velocity motion) conditions. Through simulation and hardware experiments, we demonstrate that our proposed approaches estimate the initial pose and initial trajectories accurately compared to iterative local solvers. Additionally, the proposed relaxations recover global minima under moderate range measurement noise levels.}

}

Range-visual-inertial sensor fusion for micro aerial vehicle localization and navigation. A. Goudar, W. Zhao, and A. P. Schoellig. IEEE Robotics and Automation Letters, vol. 9, iss. 1, p. 683–690, 2024.

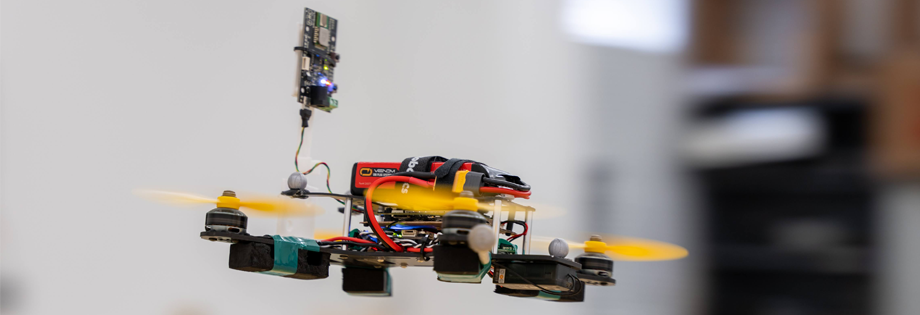

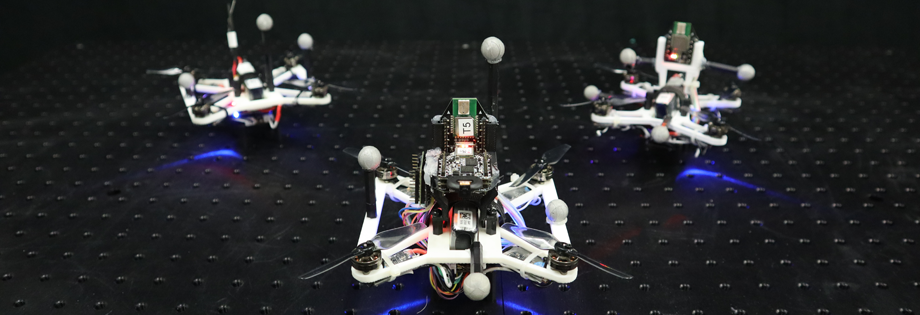

We propose a fixed-lag smoother-based sensor fusion architecture to leverage the complementary benefits of range-based sensors and visual-inertial odometry (VIO) for localization. We use two fixed-lag smoothers (FLS) to decouple accurate state estimation and high-rate pose generation for closed-loop control. The first FLS combines ultrawideband (UWB)-based range measurements and VIO to estimate the robot trajectory and any systematic biases that affect the range measurements in cluttered environments. The second FLS estimates smooth corrections to VIO to generate pose estimates at a high rate for online control. The proposed method is lightweight and can run on a computationally constrained micro-aerial vehicle (MAV). We validate our approach through closed-loop flight tests involving dynamic trajectories in multiple real-world cluttered indoor environments. Our method achieves decimeter-to-sub-decimeter-level positioning accuracy using off-the-shelf sensors and decimeter-level tracking accuracy with minimally-tuned open-source controllers.

@article{goudar-ral24,

author={Abhishek Goudar and Wenda Zhao and Angela P. Schoellig},

journal={{IEEE Robotics and Automation Letters}},

title={Range-Visual-Inertial Sensor Fusion for Micro Aerial Vehicle Localization and Navigation},

year={2024},

volume={9},

number={1},

pages={683--690},

doi={10.1109/LRA.2023.3335772},

abstract={We propose a fixed-lag smoother-based sensor fusion architecture to leverage the complementary benefits of range-based sensors and visual-inertial odometry (VIO) for localization. We use two fixed-lag smoothers (FLS) to decouple accurate state estimation and high-rate pose generation for closed-loop control. The first FLS combines ultrawideband (UWB)-based range measurements and VIO to estimate the robot trajectory and any systematic biases that affect the range measurements in cluttered environments. The second FLS estimates smooth corrections to VIO to generate pose estimates at a high rate for online control. The proposed method is lightweight and can run on a computationally constrained micro-aerial vehicle (MAV). We validate our approach through closed-loop flight tests involving dynamic trajectories in multiple real-world cluttered indoor environments. Our method achieves decimeter-to-sub-decimeter-level positioning accuracy using off-the-shelf sensors and decimeter-level tracking accuracy with minimally-tuned open-source controllers.}

}

UTIL: an ultra-wideband time-difference-of-arrival indoor localization dataset. W. Zhao, A. Goudar, X. Qiao, and A. P. Schoellig. International Journal of Robotics Research, 2024. In press.

Ultra-wideband (UWB) time-difference-of-arrival (TDOA)-based localization has emerged as a promising, low-cost, and scalable indoor localization solution, which is especially suited for multi-robot applications. However, there is a lack of public datasets to study and benchmark UWB TDOA positioning technology in cluttered indoor environments. We fill in this gap by presenting a comprehensive dataset using Decawave’s DWM1000 UWB modules. To characterize the UWB TDOA measurement performance under various line-of-sight (LOS) and non-line-of-sight (NLOS) conditions, we collected signal-to-noise ratio (SNR), power difference values, and raw UWB TDOA measurements during the identification experiments. We also conducted a cumulative total of around 150 min of real-world flight experiments on a customized quadrotor platform to benchmark the UWB TDOA localization performance for mobile robots. The quadrotor was commanded to fly with an average speed of 0.45 m/s in both obstacle-free and cluttered environments using four different UWB anchor constellations. Raw sensor data including UWB TDOA, inertial measurement unit (IMU), optical flow, time-of-flight (ToF) laser altitude, and millimeter-accurate ground truth robot poses were collected during the flights. The dataset and development kit are available at https://utiasdsl.github.io/util-uwb-dataset/.

@article{zhao-ijrr24,

author = {Wenda Zhao and Abhishek Goudar and Xinyuan Qiao and Angela P. Schoellig},

title = {{UTIL}: An ultra-wideband time-difference-of-arrival indoor localization dataset},

journal = {{International Journal of Robotics Research}},

year = {2024},

volume = {},

number = {},

pages = {},

note = {In press},

urllink = {https://journals.sagepub.com/doi/full/10.1177/02783649241230640},

urlcode = {https://github.com/utiasDSL/util-uwb-dataset},

doi = {10.1177/02783649241230640},

abstract = {Ultra-wideband (UWB) time-difference-of-arrival (TDOA)-based localization has emerged as a promising, low-cost, and scalable indoor localization solution, which is especially suited for multi-robot applications. However, there is a lack of public datasets to study and benchmark UWB TDOA positioning technology in cluttered indoor environments. We fill in this gap by presenting a comprehensive dataset using Decawave’s DWM1000 UWB modules. To characterize the UWB TDOA measurement performance under various line-of-sight (LOS) and non-line-of-sight (NLOS) conditions, we collected signal-to-noise ratio (SNR), power difference values, and raw UWB TDOA measurements during the identification experiments. We also conducted a cumulative total of around 150 min of real-world flight experiments on a customized quadrotor platform to benchmark the UWB TDOA localization performance for mobile robots. The quadrotor was commanded to fly with an average speed of 0.45 m/s in both obstacle-free and cluttered environments using four different UWB anchor constellations. Raw sensor data including UWB TDOA, inertial measurement unit (IMU), optical flow, time-of-flight (ToF) laser altitude, and millimeter-accurate ground truth robot poses were collected during the flights. The dataset and development kit are available at https://utiasdsl.github.io/util-uwb-dataset/.}

}

Closing the perception-action loop for semantically safe navigation in semi-static environments. J. Qian, S. Zhou, N. J. Ren, V. Chatrath, and A. P. Schoellig. In Proc. of the IEEE International Conference on Robotics and Automation (ICRA), 2024. Accepted.

Autonomous robots navigating in changing environments demand adaptive navigation strategies for safe long-term operation. While many modern control paradigms offer theoretical guarantees, they often assume known extrinsic safety constraints, overlooking challenges when deployed in real-world environments where objects can appear, disappear, and shift over time. In this paper, we present a closed-loop perception-action pipeline that bridges this gap. Our system encodes an online-constructed dense map, along with object-level semantic and consistency estimates into a control barrier function (CBF) to regulate safe regions in the scene. A model predictive controller (MPC) leverages the CBF-based safety constraints to adapt its navigation behaviour, which is particularly crucial when potential scene changes occur. We test the system in simulations and real-world experiments to demonstrate the impact of semantic information and scene change handling on robot behavior, validating the practicality of our approach.
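
To make the role of a control barrier function concrete, the following minimal sketch filters a nominal velocity command so that a robot keeps clear of a single circular obstacle. It is a deliberate simplification and not the pipeline described in the abstract: it assumes a 2D single-integrator robot (velocity is commanded directly), a hand-specified obstacle instead of an online semantic map, and a closed-form projection in place of a model predictive controller. The name `cbf_safety_filter` and the gain `alpha` are hypothetical.

```python
def cbf_safety_filter(position, nominal_velocity, obstacle_center,
                      obstacle_radius, alpha=1.0):
    """Minimally modify a velocity command so a CBF condition holds.

    Illustrative assumptions: single-integrator dynamics x_dot = u and the
    barrier h(x) = ||x - c||^2 - r^2, which is non-negative outside the
    obstacle. The CBF condition is grad_h(x) . u + alpha * h(x) >= 0.
    """
    px, py = position
    cx, cy = obstacle_center
    ux, uy = nominal_velocity
    dx, dy = px - cx, py - cy
    h = dx * dx + dy * dy - obstacle_radius**2
    # The condition is linear in u: a . u >= b, with a = grad_h and b = -alpha*h.
    ax, ay = 2.0 * dx, 2.0 * dy
    b = -alpha * h
    slack = ax * ux + ay * uy - b
    if slack >= 0.0:
        return (ux, uy)  # nominal command already satisfies the condition
    norm_sq = ax * ax + ay * ay
    if norm_sq == 0.0:
        raise ValueError("robot is at the obstacle center; constraint is infeasible")
    # Otherwise project the nominal command onto the half-space boundary,
    # which is the closest admissible command in the Euclidean sense.
    scale = -slack / norm_sq
    return (ux + scale * ax, uy + scale * ay)


# Robot at the origin heading quickly toward an obstacle centred at (2, 0).
safe_velocity = cbf_safety_filter((0.0, 0.0), (5.0, 0.0), (2.0, 0.0), 1.0)
print("filtered velocity:", safe_velocity)
```

In this toy setting the filter slows the approach as the robot nears the obstacle boundary and leaves commands that move away from the obstacle untouched.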

@inproceedings{qian-icra24,

author={Jingxing Qian and Siqi Zhou and Nicholas Jianrui Ren and Veronica Chatrath and Angela P. Schoellig},

booktitle = {{Proc. of the IEEE International Conference on Robotics and Automation (ICRA)}},

title={Closing the Perception-Action Loop for Semantically Safe Navigation in Semi-Static Environments},

year={2024},

note={Accepted},

abstract = {Autonomous robots navigating in changing environments demand adaptive navigation strategies for safe long-term operation. While many modern control paradigms offer theoretical guarantees, they often assume known extrinsic safety constraints, overlooking challenges when deployed in real-world environments where objects can appear, disappear, and shift over time. In this paper, we present a closed-loop perception-action pipeline that bridges this gap. Our system encodes an online-constructed dense map, along with object-level semantic and consistency estimates into a control barrier function (CBF) to regulate safe regions in the scene. A model predictive controller (MPC) leverages the CBF-based safety constraints to adapt its navigation behaviour, which is particularly crucial when potential scene changes occur. We test the system in simulations and real-world experiments to demonstrate the impact of semantic information and scene change handling on robot behavior, validating the practicality of our approach.}

}

Uncertainty-aware 3D object-level mapping with deep shape priors. Z. Liao, J. Yang, J. Qian, A. P. Schoellig, and S. L. Waslander. In Proc. of the IEEE International Conference on Robotics and Automation (ICRA), 2024. Accepted.

3D object-level mapping is a fundamental problem in robotics, which is especially challenging when object CAD models are unavailable during inference. In this work, we propose a framework that can reconstruct high-quality object-level maps for unknown objects. Our approach takes multiple RGB-D images as input and outputs dense 3D shapes and 9-DoF poses (including 3 scale parameters) for detected objects. The core idea of our approach is to leverage a learnt generative model for shape categories as a prior and to formulate a probabilistic, uncertainty-aware optimization framework for 3D reconstruction. We derive a probabilistic formulation that propagates shape and pose uncertainty through two novel loss functions. Unlike current state-of-the-art approaches, we explicitly model the uncertainty of the object shapes and poses during our optimization, resulting in a high-quality object-level mapping system. Moreover, the resulting shape and pose uncertainties, which we demonstrate can accurately reflect the true errors of our object maps, can also be useful for downstream robotics tasks such as active vision. We perform extensive evaluations on indoor and outdoor real-world datasets, achieving substantial improvements over state-of-the-art methods. Our code will be available at https://github.com/TRAILab/UncertainShapePose

@inproceedings{liao-icra24,

author={Ziwei Liao and Jun Yang and Jingxing Qian and Angela P. Schoellig and Steven L. Waslander},

booktitle = {{Proc. of the IEEE International Conference on Robotics and Automation (ICRA)}},

title={Uncertainty-aware {3D} Object-Level Mapping with Deep Shape Priors},

year={2024},

note={Accepted},

abstract = {3D object-level mapping is a fundamental problem in robotics, which is especially challenging when object CAD models are unavailable during inference. In this work, we propose a framework that can reconstruct high-quality object-level maps for unknown objects. Our approach takes multiple RGB-D images as input and outputs dense 3D shapes and 9-DoF poses (including 3 scale parameters) for detected objects. The core idea of our approach is to leverage a learnt generative model for shape categories as a prior and to formulate a probabilistic, uncertainty-aware optimization framework for 3D reconstruction. We derive a probabilistic formulation that propagates shape and pose uncertainty through two novel loss functions. Unlike current state-of-the-art approaches, we explicitly model the uncertainty of the object shapes and poses during our optimization, resulting in a high-quality object-level mapping system. Moreover, the resulting shape and pose uncertainties, which we demonstrate can accurately reflect the true errors of our object maps, can also be useful for downstream robotics tasks such as active vision. We perform extensive evaluations on indoor and outdoor real-world datasets, achieving substantial improvements over state-of-the-art methods. Our code will be available at https://github.com/TRAILab/UncertainShapePose}

}

POV-SLAM: probabilistic object-aware variational SLAM in semi-static environments. J. Qian, V. Chatrath, J. Servos, A. Mavrinac, W. Burgard, S. L. Waslander, and A. P. Schoellig. In Proc. of Robotics: Science and Systems, 2023.

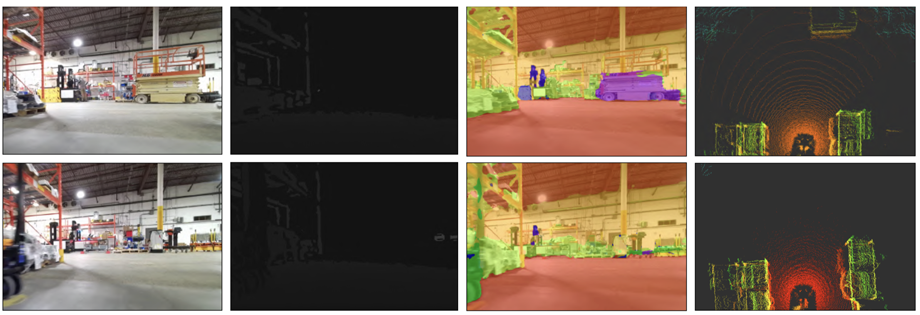

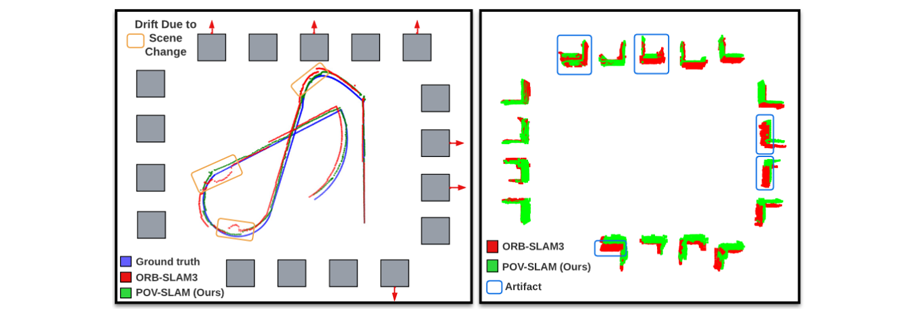

Simultaneous localization and mapping (SLAM) in slowly varying scenes is important for long-term robot task completion in GPS-denied environments. Failing to detect scene changes may lead to inaccurate maps and, ultimately, lost robots. Classical SLAM algorithms assume static scenes, and recent works take dynamics into account, but require scene changes to be observed in consecutive frames. Semi-static scenes, wherein objects appear, disappear, or move slowly over time, are often overlooked, yet are critical for long-term operation. We propose an object-aware, factor-graph SLAM framework that tracks and reconstructs semi-static object-level changes. Our novel variational expectation-maximization strategy is used to optimize factor graphs involving a Gaussian-Uniform bimodal measurement likelihood for potentially-changing objects. We evaluate our approach alongside the state-of-the-art SLAM solutions in simulation and on our novel real-world SLAM dataset captured in a warehouse over four months. Our method improves the robustness of localization in the presence of semi-static changes, providing object-level reasoning about the scene.
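
The Gaussian-Uniform measurement likelihood mentioned in the abstract can be illustrated with a scalar toy example. The sketch below computes the posterior probability that an object is unchanged given a single measurement residual, which corresponds to the expectation step of an ordinary mixture model. It is only an assumption-laden illustration, not the variational expectation-maximization strategy over factor graphs proposed in the paper; the function name `static_responsibility` and all parameter values are hypothetical.

```python
import math


def static_responsibility(residual, sigma, uniform_width, prior_static=0.5):
    """Posterior probability that an object has not changed.

    Illustrative mixture: with probability prior_static the residual is
    Gaussian (object unchanged, standard deviation sigma); otherwise it is
    uniform over an interval of length uniform_width (object moved or gone).
    The residual is assumed to lie inside the uniform interval.
    """
    gaussian = math.exp(-0.5 * (residual / sigma) ** 2) / (
        sigma * math.sqrt(2.0 * math.pi)
    )
    uniform = 1.0 / uniform_width
    weighted_gaussian = prior_static * gaussian
    return weighted_gaussian / (weighted_gaussian + (1.0 - prior_static) * uniform)


# A residual near zero supports "unchanged"; a large residual is explained
# almost entirely by the uniform (changed) component.
for residual in (0.0, 0.2, 2.0):
    probability = static_responsibility(residual, sigma=0.1, uniform_width=10.0)
    print(f"residual={residual:.1f} m -> P(unchanged)={probability:.4f}")
```

The appeal of this kind of likelihood is that measurements of changed objects are down-weighted smoothly instead of being trusted as if the scene were static.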

@inproceedings{qian-rss23,

author = {Jingxing Qian and Veronica Chatrath and James Servos and Aaron Mavrinac and Wolfram Burgard and Steven L. Waslander and Angela P. Schoellig},

title = {{POV-SLAM}: Probabilistic Object-Aware Variational {SLAM} in Semi-Static Environments},

booktitle = {{Proc. of Robotics: Science and Systems}},

year = {2023},

doi = {10.15607/RSS.2023.XIX.069},

abstract = {Simultaneous localization and mapping (SLAM) in slowly varying scenes is important for long-term robot task completion in GPS-denied environments. Failing to detect scene changes may lead to inaccurate maps and, ultimately, lost robots. Classical SLAM algorithms assume static scenes, and recent works take dynamics into account, but require scene changes to be observed in consecutive frames. Semi-static scenes, wherein objects appear, disappear, or move slowly over time, are often overlooked, yet are critical for long-term operation. We propose an object-aware, factor-graph SLAM framework that tracks and reconstructs semi-static object-level changes. Our novel variational expectation-maximization strategy is used to optimize factor graphs involving a Gaussian-Uniform bimodal measurement likelihood for potentially-changing objects. We evaluate our approach alongside the state-of-the-art SLAM solutions in simulation and on our novel real-world SLAM dataset captured in a warehouse over four months. Our method improves the robustness of localization in the presence of semi-static changes, providing object-level reasoning about the scene.}

}

Uncertainty-aware Gaussian mixture model for UWB time difference of arrival localization in cluttered environments. W. Zhao, A. Goudar, M. Tang, X. Qiao, and A. P. Schoellig. In Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023, p. 5266–5273.

Ultra-wideband (UWB) time difference of arrival (TDOA)-based localization has emerged as a low-cost and scalable indoor positioning solution. However, in cluttered environments, the performance of UWB TDOA-based localization deteriorates due to the biased and non-Gaussian noise distributions induced by obstacles. In this work, we present a bi-level optimization-based joint localization and noise model learning algorithm to address this problem. In particular, we use a Gaussian mixture model (GMM) to approximate the measurement noise distribution. We explicitly incorporate the estimated state’s uncertainty into the GMM noise model learning, referred to as uncertainty-aware GMM, to improve both noise modeling and localization performance. We first evaluate the GMM noise model learning and localization performance in numerous simulation scenarios. We then demonstrate the effectiveness of our algorithm in extensive real-world experiments using two different cluttered environments. We show that our algorithm provides accurate position estimates with low-cost UWB sensors, no prior knowledge about the obstacles in the space, and a significant amount of UWB radios occluded.
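
As a small illustration of why a Gaussian mixture can describe obstacle-induced noise better than a single Gaussian, the sketch below evaluates a TDOA measurement model and the negative log-likelihood of a residual under a mixture. It does not implement the bi-level optimization or the uncertainty-aware learning described in the abstract: the mixture parameters are fixed by hand, and the names `tdoa` and `gmm_negative_log_likelihood` as well as all numeric values are assumptions for the example.

```python
import math


def tdoa(position, anchor_i, anchor_j):
    """Ideal TDOA measurement expressed as a range difference in meters."""
    return math.dist(position, anchor_j) - math.dist(position, anchor_i)


def gmm_negative_log_likelihood(residual, components):
    """Negative log-likelihood of a scalar residual under a Gaussian mixture.

    components is a list of (weight, mean, sigma) tuples whose weights are
    assumed to sum to one. Log-sum-exp keeps the evaluation numerically stable.
    """
    log_terms = [
        math.log(weight)
        - math.log(sigma)
        - 0.5 * math.log(2.0 * math.pi)
        - 0.5 * ((residual - mean) / sigma) ** 2
        for weight, mean, sigma in components
    ]
    largest = max(log_terms)
    return -(largest + math.log(sum(math.exp(t - largest) for t in log_terms)))


# Hand-picked example: a narrow line-of-sight mode plus a biased, wider mode.
single_gaussian = [(1.0, 0.0, 0.05)]
mixture = [(0.7, 0.0, 0.05), (0.3, 0.4, 0.2)]

predicted = tdoa((0.0, 0.0), (3.0, 4.0), (6.0, 8.0))
measured = predicted + 0.4  # hypothetical measurement with a 0.4 m bias
residual = measured - predicted
print("single Gaussian NLL:", gmm_negative_log_likelihood(residual, single_gaussian))
print("mixture NLL:", gmm_negative_log_likelihood(residual, mixture))
```

The biased measurement is heavily penalized by the single Gaussian but remains plausible under the mixture, so it distorts a position estimate less.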

@INPROCEEDINGS{zhao-iros23,

author={Wenda Zhao and Abhishek Goudar and Mingliang Tang and Xinyuan Qiao and Angela P. Schoellig},

booktitle = {{Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}},

title={Uncertainty-aware Gaussian Mixture Model for UWB Time Difference of Arrival Localization in Cluttered Environments},

year={2023},

pages={5266--5273},

doi={10.1109/IROS55552.2023.10342365},

urllink={https://ieeexplore.ieee.org/document/10342365},

abstract = {Ultra-wideband (UWB) time difference of arrival (TDOA)-based localization has emerged as a low-cost and scalable indoor positioning solution. However, in cluttered environments, the performance of UWB TDOA-based localization deteriorates due to the biased and non-Gaussian noise distributions induced by obstacles. In this work, we present a bi-level optimization-based joint localization and noise model learning algorithm to address this problem. In particular, we use a Gaussian mixture model (GMM) to approximate the measurement noise distribution. We explicitly incorporate the estimated state’s uncertainty into the GMM noise model learning, referred to as uncertainty-aware GMM, to improve both noise modeling and localization performance. We first evaluate the GMM noise model learning and localization performance in numerous simulation scenarios. We then demonstrate the effectiveness of our algorithm in extensive real-world experiments using two different cluttered environments. We show that our algorithm provides accurate position estimates with low-cost UWB sensors, no prior knowledge about the obstacles in the space, and a significant amount of UWB radios occluded.}

}

Continuous-time range-only pose estimation. A. Goudar, T. D. Barfoot, and A. P. Schoellig. In Proc. of the Conference on Robots and Vision (CRV), 2023, p. 29–36.

Range-only (RO) localization involves determining the position of a mobile robot by measuring the distance to specific anchors. RO localization is challenging since the measurements are low-dimensional and a single range sensor does not have enough information to estimate the full pose of the robot. As such, range sensors are typically coupled with other sensing modalities such as wheel encoders or inertial measurement units (IMUs) to estimate the full pose. In this work, we propose a continuous-time Gaussian process (GP)-based trajectory estimation method to estimate the full pose of a robot using only range measurements from multiple range sensors. Results from simulation and real experiments show that our proposed method, using off-the-shelf range sensors, is able to achieve comparable performance and in some cases outperform alternative state-of-the-art sensor-fusion methods that use additional sensing modalities.

@INPROCEEDINGS{goudar-crv23,

author={Abhishek Goudar and Timothy D. Barfoot and Angela P. Schoellig},

booktitle = {{Proc. of the Conference on Robots and Vision (CRV)}},

title={Continuous-Time Range-Only Pose Estimation},

year={2023},

pages={29--36},

doi={10.1109/CRV60082.2023.00012},

urllink = {https://arxiv.org/abs/2304.09043},

abstract = {Range-only (RO) localization involves determining the position of a mobile robot by measuring the distance to specific anchors. RO localization is challenging since the measurements are low-dimensional and a single range sensor does not have enough information to estimate the full pose of the robot. As such, range sensors are typically coupled with other sensing modalities such as wheel encoders or inertial measurement units (IMUs) to estimate the full pose. In this work, we propose a continuous-time Gaussian process (GP)-based trajectory estimation method to estimate the full pose of a robot using only range measurements from multiple range sensors. Results from simulation and real experiments show that our proposed method, using off-the-shelf range sensors, is able to achieve comparable performance and in some cases outperform alternative state-of-the-art sensor-fusion methods that use additional sensing modalities.}

}

Finding the right place: sensor placement for UWB time difference of arrival localization in cluttered indoor environments. W. Zhao, A. Goudar, and A. P. Schoellig. IEEE Robotics and Automation Letters, vol. 7, iss. 3, p. 6075–6082, 2022.

Ultra-wideband (UWB) time difference of arrival (TDOA)-based localization has recently emerged as a promising indoor positioning solution. However, in cluttered environments, both the UWB radio positions and the obstacle-induced non-line-of-sight (NLOS) measurement biases significantly impact the quality of the position estimate. Consequently, the placement of the UWB radios must be carefully designed to provide satisfactory localization accuracy for a region of interest. In this work, we propose a novel algorithm that optimizes the UWB radio positions for a pre-defined region of interest in the presence of obstacles. The mean-squared error (MSE) metric is used to formulate an optimization problem that balances the influence of the geometry of the radio positions and the NLOS effects. We further apply the proposed algorithm to compute a minimal number of UWB radios required for a desired localization accuracy and their corresponding positions. In a real-world cluttered environment, we show that the designed UWB radio placements provide 47% and 76% localization root-mean-squared error (RMSE) reduction in 2D and 3D experiments, respectively, when compared against trivial placements.
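
The influence of anchor geometry on achievable accuracy can be illustrated with a Cramér-Rao-style lower bound. The sketch below covers only the geometry (variance) part of the problem under assumed unbiased, independent Gaussian TDOA noise; it omits the NLOS bias term that the MSE metric in the abstract also accounts for, and it is not the placement optimization algorithm from the paper. The function name `tdoa_rmse_lower_bound`, the anchor layouts, and the noise level are hypothetical.

```python
import math


def tdoa_rmse_lower_bound(position, anchors, pairs, sigma):
    """Lower bound on 2D position RMSE for TDOA measurements at one point.

    Assumes each anchor pair (i, j) measures ||p - a_j|| - ||p - a_i|| with
    independent zero-mean Gaussian noise of standard deviation sigma, and
    that the evaluated position does not coincide with any anchor.
    """
    j11 = j12 = j22 = 0.0
    for i, j in pairs:
        gx = gy = 0.0
        # Gradient of the range difference: unit vector from anchor j to the
        # position minus the unit vector from anchor i to the position.
        for sign, (ax, ay) in ((1.0, anchors[j]), (-1.0, anchors[i])):
            dx, dy = position[0] - ax, position[1] - ay
            distance = math.hypot(dx, dy)
            gx += sign * dx / distance
            gy += sign * dy / distance
        # Accumulate the Fisher information matrix J = sum(g g^T) / sigma^2.
        j11 += gx * gx / sigma**2
        j12 += gx * gy / sigma**2
        j22 += gy * gy / sigma**2
    det = j11 * j22 - j12 * j12
    if det <= 1e-12:
        return math.inf  # position is not observable from this geometry
    # RMSE bound = sqrt(trace(J^-1)); for a 2x2 matrix the trace of the
    # inverse is (j11 + j22) / det.
    return math.sqrt((j11 + j22) / det)


pairs = [(0, 1), (1, 2), (2, 3), (3, 0)]
square = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
line = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
print("surrounding anchors:", tdoa_rmse_lower_bound((2.0, 2.0), square, pairs, 0.1))
print("anchors along one wall:", tdoa_rmse_lower_bound((1.5, 4.0), line, pairs, 0.1))
```

Anchors that surround the evaluated point give a much tighter bound than anchors lined up along one wall, which is the geometric effect a placement optimizer has to trade off against obstacle-induced bias.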

@article{zhao-ral22-arxiv,

author = {Wenda Zhao and Abhishek Goudar and Angela P. Schoellig},

title = {Finding the Right Place: Sensor Placement for {UWB} Time Difference of Arrival Localization in Cluttered Indoor Environments},

journal = {{IEEE Robotics and Automation Letters}},

year={2022},

volume={7},

number={3},

pages={6075--6082},

doi={10.1109/LRA.2022.3165181},

abstract = {Ultra-wideband (UWB) time difference of arrival (TDOA)-based localization has recently emerged as a promising indoor positioning solution. However, in cluttered environments, both the UWB radio positions and the obstacle-induced non-line-of-sight (NLOS) measurement biases significantly impact the quality of the position estimate. Consequently, the placement of the UWB radios must be carefully designed to provide satisfactory localization accuracy for a region of interest. In this work, we propose a novel algorithm that optimizes the UWB radio positions for a pre-defined region of interest in the presence of obstacles. The mean-squared error (MSE) metric is used to formulate an optimization problem that balances the influence of the geometry of the radio positions and the NLOS effects. We further apply the proposed algorithm to compute a minimal number of UWB radios required for a desired localization accuracy and their corresponding positions. In a real-world cluttered environment, we show that the designed UWB radio placements provide 47\% and 76\% localization root-mean-squared error (RMSE) reduction in 2D and 3D experiments, respectively, when compared against trivial placements.}

}

Gaussian variational inference with covariance constraints applied to range-only localization. A. Goudar, W. Zhao, T. D. Barfoot, and A. P. Schoellig. In Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022, pp. 2872–2879.


Accurate and reliable state estimation is becoming increasingly important as robots venture into the real world. Gaussian variational inference (GVI) is a promising alternative for nonlinear state estimation, which estimates a full probability density for the posterior instead of a point estimate as in maximum a posteriori (MAP)-based approaches. GVI works by optimizing for the parameters of a multivariate Gaussian (MVG) that best agree with the observed data. However, such an optimization procedure must ensure the parameter constraints of a MVG are satisfied; in particular, the inverse covariance matrix must be positive definite. In this work, we propose a tractable algorithm for performing state estimation using GVI that guarantees that the inverse covariance matrix remains positive definite and is well-conditioned throughout the optimization procedure. We evaluate our method extensively in both simulation and real-world experiments for range-only localization. Our results show GVI is consistent on this problem, while MAP is over-confident.
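The positive-definiteness constraint mentioned in this abstract can be illustrated with a small check. The sketch below is not the algorithm proposed in the paper; it only relies on the standard fact that a symmetric matrix is positive definite exactly when its Cholesky factorization succeeds with strictly positive pivots. The function names `cholesky` and `is_positive_definite` are hypothetical and chosen for this illustration.

```python
import math


def cholesky(matrix):
    """Return lower-triangular L with matrix = L L^T.

    Raises ValueError if the symmetric input is not positive definite.
    """
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            partial = sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                pivot = matrix[i][i] - partial
                if pivot <= 0.0:
                    raise ValueError("matrix is not positive definite")
                lower[i][j] = math.sqrt(pivot)
            else:
                lower[i][j] = (matrix[i][j] - partial) / lower[j][j]
    return lower


def is_positive_definite(matrix):
    """True if the symmetric matrix admits a Cholesky factorization."""
    try:
        cholesky(matrix)
        return True
    except ValueError:
        return False


# A valid inverse covariance (information) matrix and an invalid one.
valid_information = [[2.0, -1.0], [-1.0, 2.0]]
invalid_information = [[1.0, 2.0], [2.0, 1.0]]  # eigenvalues 3 and -1
```

An optimizer that updates the inverse covariance without any safeguard can step to a matrix like `invalid_information`, which no longer describes a Gaussian; this is the failure mode the abstract says the proposed algorithm avoids.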

@INPROCEEDINGS{goudar-iros22,

author={Abhishek Goudar and Wenda Zhao and Timothy D. Barfoot and Angela P. Schoellig},

booktitle = {{Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}},

title={Gaussian Variational Inference with Covariance Constraints Applied to Range-only Localization},

year={2022},

pages={2872--2879},

doi={10.1109/IROS47612.2022.9981520},

abstract = {Accurate and reliable state estimation is becoming increasingly important as robots venture into the real world. Gaussian variational inference (GVI) is a promising alternative for nonlinear state estimation, which estimates a full probability density for the posterior instead of a point estimate as in maximum a posteriori (MAP)-based approaches. GVI works by optimizing for the parameters of a multivariate Gaussian (MVG) that best agree with the observed data. However, such an optimization procedure must ensure the parameter constraints of a MVG are satisfied; in particular, the inverse covariance matrix must be positive definite. In this work, we propose a tractable algorithm for performing state estimation using GVI that guarantees that the inverse covariance matrix remains positive definite and is well-conditioned throughout the optimization procedure. We evaluate our method extensively in both simulation and real-world experiments for range-only localization. Our results show GVI is consistent on this problem, while MAP is over-confident.}

}

POCD: probabilistic object-level change detection and volumetric mapping in semi-static scenes. J. Qian, V. Chatrath, J. Yang, J. Servos, A. Schoellig, and S. L. Waslander. In Proc. of Robotics: Science and Systems (RSS), 2022.


Maintaining an up-to-date map to reflect recent changes in the scene is very important, particularly in situations involving repeated traversals by a robot operating in an environment over an extended period. Undetected changes may cause a deterioration in map quality, leading to poor localization, inefficient operations, and lost robots. Volumetric methods, such as truncated signed distance functions (TSDFs), have quickly gained traction due to their real-time production of a dense and detailed map, though map updating in scenes that change over time remains a challenge. We propose a framework that introduces a novel probabilistic object state representation to track object pose changes in semi-static scenes. The representation jointly models a stationarity score and a TSDF change measure for each object. A Bayesian update rule that incorporates both geometric and semantic information is derived to achieve consistent online map maintenance. To extensively evaluate our approach alongside the state-of-the-art, we release a novel real-world dataset in a warehouse environment. We also evaluate on the public ToyCar dataset. Our method outperforms state-of-the-art methods on the reconstruction quality of semi-static environments.
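To give a feel for what a recursive Bayesian update of an object's state looks like, the sketch below tracks a single probability that an object is still stationary. It is a generic binary Bayes filter under assumed likelihood values, not the joint stationarity and TSDF-change model or the update rule derived in the paper; the function name `update_stationarity` and all numbers are hypothetical.

```python
def update_stationarity(prior, likelihood_if_stationary, likelihood_if_moved):
    """Posterior probability that an object is stationary after one observation.

    prior: probability of "stationary" before the observation.
    likelihood_if_stationary: p(observation | object did not move).
    likelihood_if_moved: p(observation | object moved).
    """
    numerator = likelihood_if_stationary * prior
    evidence = numerator + likelihood_if_moved * (1.0 - prior)
    if evidence == 0.0:
        # The observation has zero likelihood under both hypotheses; keep the prior.
        return prior
    return numerator / evidence


# Two consecutive observations that each fit "moved" four times better
# than "stationary" (assumed likelihoods, for illustration only).
belief = 0.9
for _ in range(2):
    belief = update_stationarity(belief, 0.2, 0.8)
```

Starting from a confident prior of 0.9, two such observations bring the belief down to 0.36, which shows how repeated evidence of change gradually overrides the assumption that the map is still valid.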

@INPROCEEDINGS{qian-rss22,

author={Jingxing Qian and Veronica Chatrath and Jun Yang and James Servos and Angela Schoellig and Steven L. Waslander},

booktitle={{Proc. of Robotics: Science and Systems (RSS)}},

title={{POCD}: Probabilistic Object-Level Change Detection and Volumetric Mapping in Semi-Static Scenes},

year={2022},

doi={10.15607/RSS.2022.XVIII.013},

abstract={Maintaining an up-to-date map to reflect recent changes in the scene is very important, particularly in situations involving repeated traversals by a robot operating in an environment over an extended period. Undetected changes may cause a deterioration in map quality, leading to poor localization, inefficient operations, and lost robots. Volumetric methods, such as truncated signed distance functions (TSDFs), have quickly gained traction due to their real-time production of a dense and detailed map, though map updating in scenes that change over time remains a challenge. We propose a framework that introduces a novel probabilistic object state representation to track object pose changes in semi-static scenes. The representation jointly models a stationarity score and a TSDF change measure for each object. A Bayesian update rule that incorporates both geometric and semantic information is derived to achieve consistent online map maintenance. To extensively evaluate our approach alongside the state-of-the-art, we release a novel real-world dataset in a warehouse environment. We also evaluate on the public ToyCar dataset. Our method outperforms state-of-the-art methods on the reconstruction quality of semi-static environments.}

}

Learning-based bias correction for time difference of arrival ultra-wideband localization of resource-constrained mobile robots. W. Zhao, J. Panerati, and A. P. Schoellig. IEEE Robotics and Automation Letters, vol. 6, iss. 2, pp. 3639–3646, 2021.


Accurate indoor localization is a crucial enabling technology for many robotics applications, from warehouse management to monitoring tasks. Ultra-wideband (UWB) time difference of arrival (TDOA)-based localization is a promising lightweight, low-cost solution that can scale to a large number of devices, making it especially suited for resource-constrained multi-robot applications. However, the localization accuracy of standard, commercially available UWB radios is often insufficient due to significant measurement bias and outliers. In this letter, we address these issues by proposing a robust UWB TDOA localization framework comprising (i) learning-based bias correction and (ii) M-estimation-based robust filtering to handle outliers. The key properties of our approach are that (i) the learned biases generalize to different UWB anchor setups and (ii) the approach is computationally efficient enough to run on resource-constrained hardware. We demonstrate our approach on a Crazyflie nano-quadcopter. Experimental results show that the proposed localization framework, relying only on the onboard IMU and UWB, provides an average of 42.08% localization error reduction (in three different anchor setups) compared to the baseline approach without bias compensation. We also show autonomous trajectory tracking on a quadcopter using our UWB TDOA localization approach.
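The idea behind M-estimation, down-weighting measurements with large residuals, can be sketched in a few lines. The example below assumes the Huber loss and applies it to a scalar estimate through iteratively reweighted averaging; the abstract does not state which M-estimator the paper uses or how it is embedded in the filter, so the choice of Huber, the threshold, and the names `huber_weight` and `robust_mean` are all assumptions made for illustration.

```python
def huber_weight(residual, threshold=1.0):
    """Weight given to a residual under the Huber loss.

    Residuals within the threshold keep full weight; larger ones are
    scaled down in proportion to their magnitude.
    """
    magnitude = abs(residual)
    if magnitude <= threshold:
        return 1.0
    return threshold / magnitude


def robust_mean(samples, threshold=1.0, iterations=20):
    """Iteratively reweighted estimate of a scalar from noisy samples."""
    estimate = sorted(samples)[len(samples) // 2]  # start from the median
    for _ in range(iterations):
        weights = [huber_weight(s - estimate, threshold) for s in samples]
        estimate = sum(w * s for w, s in zip(weights, samples)) / sum(weights)
    return estimate


# Four consistent measurements and one gross outlier (made-up values).
measurements = [1.0, 1.1, 0.9, 1.0, 10.0]
plain = sum(measurements) / len(measurements)
robust = robust_mean(measurements)
```

With these made-up values the plain mean is pulled to 2.8 by the outlier, while the reweighted estimate stays near the consistent measurements. A robust filter applies the same kind of weighting to the measurement update rather than to a simple average.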

@article{zhao-ral21,

title = {Learning-based Bias Correction for Time Difference of Arrival Ultra-wideband Localization of Resource-constrained Mobile Robots},

author = {Wenda Zhao and Jacopo Panerati and Angela P. Schoellig},

journal = {{IEEE Robotics and Automation Letters}},

year = {2021},

volume = {6},

number = {2},

pages = {3639--3646},

doi = {10.1109/LRA.2021.3064199},

urlvideo = {https://youtu.be/J32mrDN5ws4},

urllink = {https://ieeexplore.ieee.org/document/9372785},

abstract = {Accurate indoor localization is a crucial enabling technology for many robotics applications, from warehouse management to monitoring tasks. Ultra-wideband (UWB) time difference of arrival (TDOA)-based localization is a promising lightweight, low-cost solution that can scale to a large number of devices, making it especially suited for resource-constrained multi-robot applications. However, the localization accuracy of standard, commercially available UWB radios is often insufficient due to significant measurement bias and outliers. In this letter, we address these issues by proposing a robust UWB TDOA localization framework comprising (i) learning-based bias correction and (ii) M-estimation-based robust filtering to handle outliers. The key properties of our approach are that (i) the learned biases generalize to different UWB anchor setups and (ii) the approach is computationally efficient enough to run on resource-constrained hardware. We demonstrate our approach on a Crazyflie nano-quadcopter. Experimental results show that the proposed localization framework, relying only on the onboard IMU and UWB, provides an average of 42.08\% localization error reduction (in three different anchor setups) compared to the baseline approach without bias compensation. We also show autonomous trajectory tracking on a quadcopter using our UWB TDOA localization approach.}

}

Online spatio-temporal calibration of tightly-coupled ultrawideband-aided inertial localization. A. Goudar and A. P. Schoellig. In Proc. of the IEEE International Conference on Intelligent Robots and Systems (IROS), 2021, pp. 1161–1168.


The combination of ultrawideband (UWB) radios and inertial measurement units (IMU) can provide accurate positioning in environments where the Global Positioning System (GPS) service is either unavailable or has unsatisfactory performance. The two sensors, IMU and UWB radio, are often not co-located on a moving system. The UWB radio is typically located at the extremities of the system to ensure reliable communication, whereas the IMUs are located closer to its center of gravity. Furthermore, without hardware or software synchronization, data from heterogeneous sensors can arrive at different time instants resulting in temporal offsets. If uncalibrated, these spatial and temporal offsets can degrade the positioning performance. In this paper, using observability and identifiability criteria, we derive the conditions required for successfully calibrating the spatial and the temporal offset parameters of a tightly-coupled UWB-IMU system. We also present an online method for jointly calibrating these offsets. The results show that our calibration approach results in improved positioning accuracy while simultaneously estimating (i) the spatial offset parameters to millimeter precision and (ii) the temporal offset parameter to millisecond precision.
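The effect of the two offsets on a single range measurement can be made concrete with a small planar sketch. It assumes a 2D pose, a constant velocity over the duration of the temporal offset, an unchanged heading over that interval, and a sign convention in which a positive offset means the UWB measurement was taken later than the IMU-frame state; none of these choices come from the paper, and the function names are hypothetical.

```python
import math


def antenna_position(body_position, heading, lever_arm):
    """2D position of the UWB antenna.

    body_position: position of the IMU frame in the world frame.
    heading: rotation of the IMU frame in radians.
    lever_arm: antenna position expressed in the IMU frame (spatial offset).
    """
    c, s = math.cos(heading), math.sin(heading)
    return (body_position[0] + c * lever_arm[0] - s * lever_arm[1],
            body_position[1] + s * lever_arm[0] + c * lever_arm[1])


def predicted_range(anchor, body_position, velocity, heading, lever_arm,
                    time_offset):
    """Range from an anchor to the antenna at the measurement time."""
    # Shift the IMU-frame position by the temporal offset (constant velocity).
    shifted = (body_position[0] + velocity[0] * time_offset,
               body_position[1] + velocity[1] * time_offset)
    ax, ay = antenna_position(shifted, heading, lever_arm)
    return math.hypot(ax - anchor[0], ay - anchor[1])


# Ignoring both offsets versus accounting for them (made-up values).
naive = predicted_range((0.0, 0.0), (3.0, 4.0), (1.0, 0.0), 0.0, (0.0, 0.0), 0.0)
corrected = predicted_range((0.0, 0.0), (3.0, 4.0), (1.0, 0.0), 0.0, (0.1, 0.0), 0.02)
```

The difference between `naive` and `corrected` is the modeling error an estimator absorbs when the offsets are left uncalibrated, and it grows with the speed of the platform and the length of the lever arm.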

@INPROCEEDINGS{goudar-iros21,

author = {Abhishek Goudar and Angela P. Schoellig},

title = {Online Spatio-temporal Calibration of Tightly-coupled Ultrawideband-aided Inertial Localization},

booktitle = {{Proc. of the IEEE International Conference on Intelligent Robots and Systems (IROS)}},

year = {2021},

pages = {1161--1168},

doi = {10.1109/IROS51168.2021.9636625},

abstract = {The combination of ultrawideband (UWB) radios and inertial measurement units (IMU) can provide accurate positioning in environments where the Global Positioning System (GPS) service is either unavailable or has unsatisfactory performance. The two sensors, IMU and UWB radio, are often not co-located on a moving system. The UWB radio is typically located at the extremities of the system to ensure reliable communication, whereas the IMUs are located closer to its center of gravity. Furthermore, without hardware or software synchronization, data from heterogeneous sensors can arrive at different time instants resulting in temporal offsets. If uncalibrated, these spatial and temporal offsets can degrade the positioning performance. In this paper, using observability and identifiability criteria, we derive the conditions required for successfully calibrating the spatial and the temporal offset parameters of a tightly-coupled UWB-IMU system. We also present an online method for jointly calibrating these offsets. The results show that our calibration approach results in improved positioning accuracy while simultaneously estimating (i) the spatial offset parameters to millimeter precision and (ii) the temporal offset parameter to millisecond precision.},

}

Optimal geometry for ultra-wideband localization using Bayesian optimization. W. Zhao, M. Vukosavljev, and A. P. Schoellig. In Proc. of the International Federation of Automatic Control (IFAC) World Congress, 2020, pp. 15481–15488.


This paper introduces a novel algorithm to find a geometric configuration of ultrawideband sources in order to provide optimal position estimation performance with Time-Difference-of-Arrival measurements. Different from existing works, we aim to achieve the best localization performance for a user-defined region of interest instead of a single target point. We employ an analysis based on the Cramér-Rao lower bound and dilution of precision to formulate an optimization problem. A Bayesian optimization-based algorithm is proposed to find an optimal geometry that achieves the smallest estimation variance upper bound while ensuring source placement constraints. The approach is validated through simulation and experimental results in 2D scenarios, showing an improvement over a naive source placement.
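How anchor geometry enters the dilution of precision can be sketched for a single 2D point. The example assumes anchor 0 is the TDOA reference and that all TDOA measurements have independent noise of equal variance, which is a simplification (measurements sharing a reference anchor are correlated in practice). It evaluates one point only, whereas the paper optimizes over a region of interest, and the function names are hypothetical.

```python
import math


def tdoa_jacobian(point, anchors):
    """Jacobian rows of ||p - a_i|| - ||p - a_0|| with respect to p.

    Anchor 0 is the reference; the point must not coincide with an anchor.
    """
    def unit(anchor):
        dx, dy = point[0] - anchor[0], point[1] - anchor[1]
        norm = math.hypot(dx, dy)
        return dx / norm, dy / norm

    ref = unit(anchors[0])
    return [(ux - ref[0], uy - ref[1]) for ux, uy in map(unit, anchors[1:])]


def tdoa_dop(point, anchors):
    """Dilution of precision sqrt(trace((H^T H)^-1)) at one 2D point."""
    rows = tdoa_jacobian(point, anchors)
    a = sum(r[0] * r[0] for r in rows)
    b = sum(r[0] * r[1] for r in rows)
    d = sum(r[1] * r[1] for r in rows)
    det = a * d - b * b
    if det <= 1e-12:
        return math.inf  # geometry gives no usable position information
    return math.sqrt((a + d) / det)  # trace of the inverse of a 2x2 matrix


# Anchors on the corners of a square versus anchors on a line (made-up layouts).
square = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
line = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
dop_square = tdoa_dop((2.0, 2.0), square)
dop_line = tdoa_dop((5.0, 0.0), line)
```

Under the equal-variance assumption, multiplying the dilution of precision by the measurement noise standard deviation gives the Cramér-Rao bound on the position root-mean-squared error at that point. The collinear layout evaluated at a point on the same line yields an infinite value, which is the kind of degenerate geometry a placement algorithm has to avoid.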

@INPROCEEDINGS{zhao-ifac20,

author = {Wenda Zhao and Marijan Vukosavljev and Angela P. Schoellig},

title = {Optimal Geometry for Ultra-wideband Localization using {Bayesian} Optimization},

booktitle = {{Proc. of the International Federation of Automatic Control (IFAC) World Congress}},

year = {2020},

volume = {53},

number = {2},

pages = {15481--15488},

urlvideo = {https://youtu.be/5mqKOfWpEWc},

abstract = {This paper introduces a novel algorithm to find a geometric configuration of ultrawideband sources in order to provide optimal position estimation performance with Time-Difference-of-Arrival measurements. Different from existing works, we aim to achieve the best localization performance for a user-defined region of interest instead of a single target point. We employ an analysis based on the Cramer-Rao lower bound and dilution of precision to formulate an optimization problem. A Bayesian optimization-based algorithm is proposed to find an optimal geometry that achieves the smallest estimation variance upper bound while ensuring source placement constraints. The approach is validated through simulation and experimental results in 2D scenarios, showing an improvement over a naive source placement.},

}